How to Select a Safe and Secure AI Tool

The use of artificial intelligence (AI) is raising serious security and privacy concerns among governments, businesses, and special interest groups as they try to strike a balance between progress and risk.

We think this is just them playing catch-up with a rapidly evolving technology that has been around in one form or another for over twenty years.

When we think of AI, it seems all anyone talks about is ChatGPT. However, it’s not the only AI tool out there. In reality, there are a large number to choose from that address different usage cases. Once you start to review them, you find that they are not as complex as you first thought. You just need a structured approach to assessing them.

We are not recommending any specific tool in this blog, but to keep things simple we will use ChatGPT as our example AI service, given it is well known, fully formed, and already at the centre of many discussions around security and privacy.

What is ChatGPT?

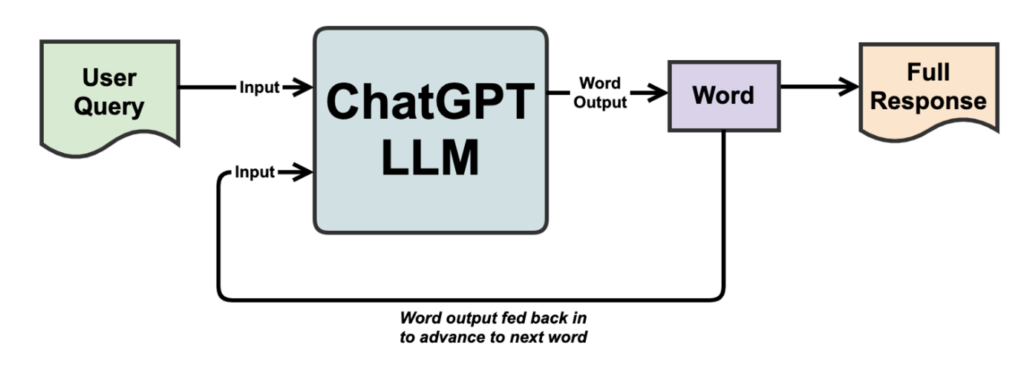

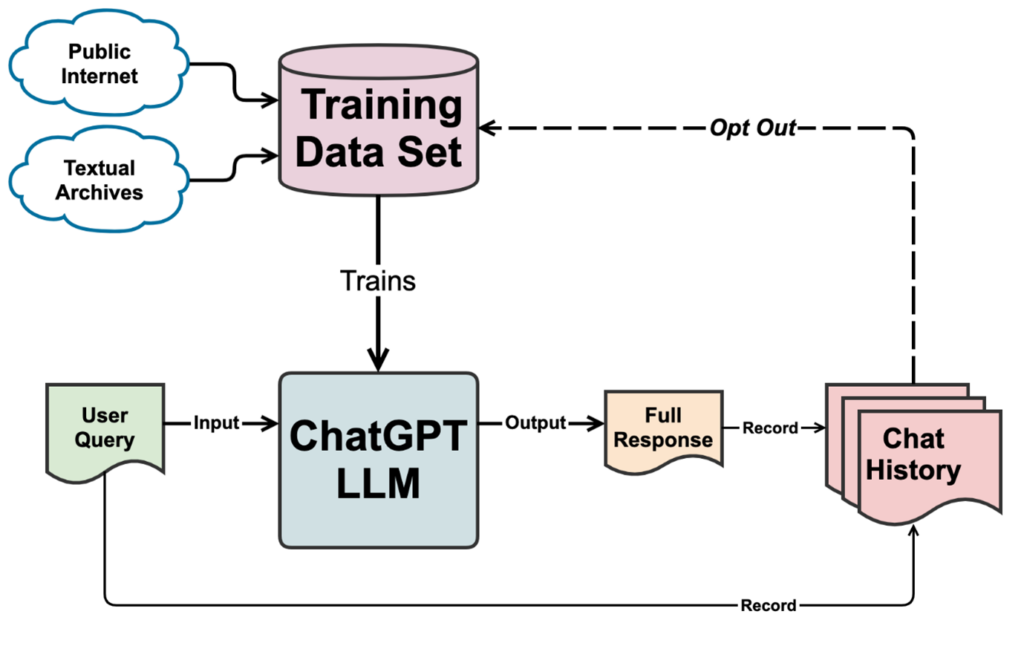

Before we get into how to use ChatGPT, it is useful to know what it is. ChatGPT is an AI service that generates a stream of text output from a stream of text input by running it through a Large Language Model (LLM), which is a very large neural network. If you have ever used ChatGPT, you will have seen it progressively print out its response a word at a time, like a modern-day equivalent of a punch tape printer or teletype.

The Large Language Model in ChatGPT gets its power to sensibly predict the next word from the size and depth of the neural networks in its structure. This, combined with advanced training algorithms and a very large training data set, means ChatGPT can discover concepts and knowledge at a greater depth and variety than previously thought possible. Equally, it means ChatGPT can derive invalid relationships and still sound convincing in its responses.
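To make "predicting the next word" concrete, here is a deliberately tiny sketch. It is not how ChatGPT is built: it swaps the neural network for a simple word-pair count (a bigram model), and the function names and the sample sentence are our own assumptions for illustration. It does show the same basic loop of emitting one word at a time based on what was seen in training.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, max_words=8):
    """Emit one word at a time, always picking the most frequent follower."""
    output = [start]
    for _ in range(max_words - 1):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # the model has never seen anything follow this word
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)

# Hypothetical, very small "training set"
corpus = "the cat sat on the mat and the cat sat down"
model = train_bigrams(corpus)
print(generate(model, "the", max_words=3))  # the cat sat
```

Even this toy model only follows the statistics of its training text. It has no idea whether the sentence it produces is true, which is the same reason a far larger model can sound convincing while being wrong.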

ChatGPT’s strength also comes from the breadth of what it has been trained on. What that is exactly is not clear (most likely kept secret for business reasons) but it certainly involved large public data sets like the internet and other ‘textual corpuses’ such as Wikipedia. Of course, such data sets have differing degrees of complexity, correctness, and bias, which can lead to some strange behaviors and interesting ‘right-to-use’ cases.

4 things to consider when selecting and using AI tools

Selecting the right subscription model

There are several ways of working with and accessing AI systems. Again, we use ChatGPT as the example and look at the subscription models available for personal and business use.

Free subscription

With the free subscription, you do not pay to use the tool; you log in with an account and interact with the AI tool directly. With ChatGPT this is a conversation-based interaction: you write a query, it responds, and this repeats until you are done. Note: the model won’t prompt you; it only responds to what you enter.

This model of usage lends itself well to one-off tasks, such as creating job descriptions, creating sales and marketing content, textual analysis, sentiment analysis and the analysis of code fragments.

It’s important to note that each series of interactions (or chats) you have with ChatGPT forms a session, in that ChatGPT remembers the previous interactions in that session to help shape its future responses. The interactions in one session cannot influence a different session.

Something to keep in mind is that those interactions can be used by OpenAI (the company behind ChatGPT) to train the model going forward, so what you enter could eventually be discovered by someone else. Samsung found this out the hard way earlier this year when two of its engineers shared code with ChatGPT and another employee uploaded the contents of an entire confidential meeting. As a result, Samsung issued a temporary ban on the use of AI on company-owned devices used by its employees.

ChatGPT has now made it possible to delete old chat sessions so they cannot be used in the training set, but by default anything left can be used for training. To stay safe, it’s best practice to remove any commercially and personally sensitive information from any queries you make. It’s also best to use a corporate email account, rather than mixing personal and business usage.
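Removing sensitive information can be partly automated before a query ever leaves your business. The following is a minimal sketch only: the two patterns (email addresses and phone-like numbers) and the `redact` function are our own assumptions, and real queries will need patterns for your own data such as names, customer IDs or project code names.

```python
import re

# Hypothetical patterns for illustration; extend these for your own data.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

def redact(query):
    """Replace anything matching a sensitive pattern with a placeholder label."""
    for label, pattern in PATTERNS.items():
        query = pattern.sub(f"[{label}]", query)
    return query

print(redact("Contact jane.doe@example.com or 0412 345 678 about the offer."))
# Contact [EMAIL] or [PHONE] about the offer.
```

Pattern matching like this will miss things, so treat it as a safety net rather than a replacement for reading the query before you send it.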

Paid subscription

For a monthly fee, users can access ChatGPT via an API or a login, using one set of credentials. This allows you to integrate ChatGPT into a single product or service and, as such, this mode of access is aimed at developers, small businesses, and startups.

The main difference from the free plan is an increased number of queries a month (30,000 compared to 1,000 with the free subscription), and the queries you make to ChatGPT will not contribute to the training set unless you opt in; they are kept private to you (remember, do not opt in).

While this is an improvement from a privacy point of view, it’s worth remembering that ChatGPT operates outside of Australia. Consider how this could impact your data usage rights and privacy policy, applying the same level of caution as with any other supplier. After all, a breach of an AI supplier could be just as damaging as a breach of any other third-party vendor.

Read more: How to Minimise your Data Risk: 6 Steps to Assess the Security of your Suppliers

Enterprise licensing

The enterprise licensing model allows you to create and maintain multiple logins and API credentials under one master business account. This is ideal if you expect employees to use ChatGPT directly or have multiple products you wish to use with ChatGPT.

The main difference from the paid subscription is that the number of queries per month jumps to 500,000 and you have access to all of the available language models (different versions of the neural network). As before, the queries you make will never contribute to the training set, unless you opt in. And as with the free and paid subscriptions, your data is not stored in Australia.

Be aware of the limitations of each subscription model and determine which one fits your usage case.

Private GPT

Whilst not a licensing model, Private GPT is a free, stand-alone, open-source large language model. It’s not as powerful as ChatGPT but offers a number of benefits. For example, you can host it on your own servers and maintain control over security and privacy. Private GPT comes with a basic training set, but its best usage case is that users can upload specialist data particular to their business. That data remains their intellectual property and can evolve over time.

Like us, you may use various combinations of subscription models depending on the nature of the data, the security required and the AI functionality that meets your usage case(s).

Can you trust the results of AI?

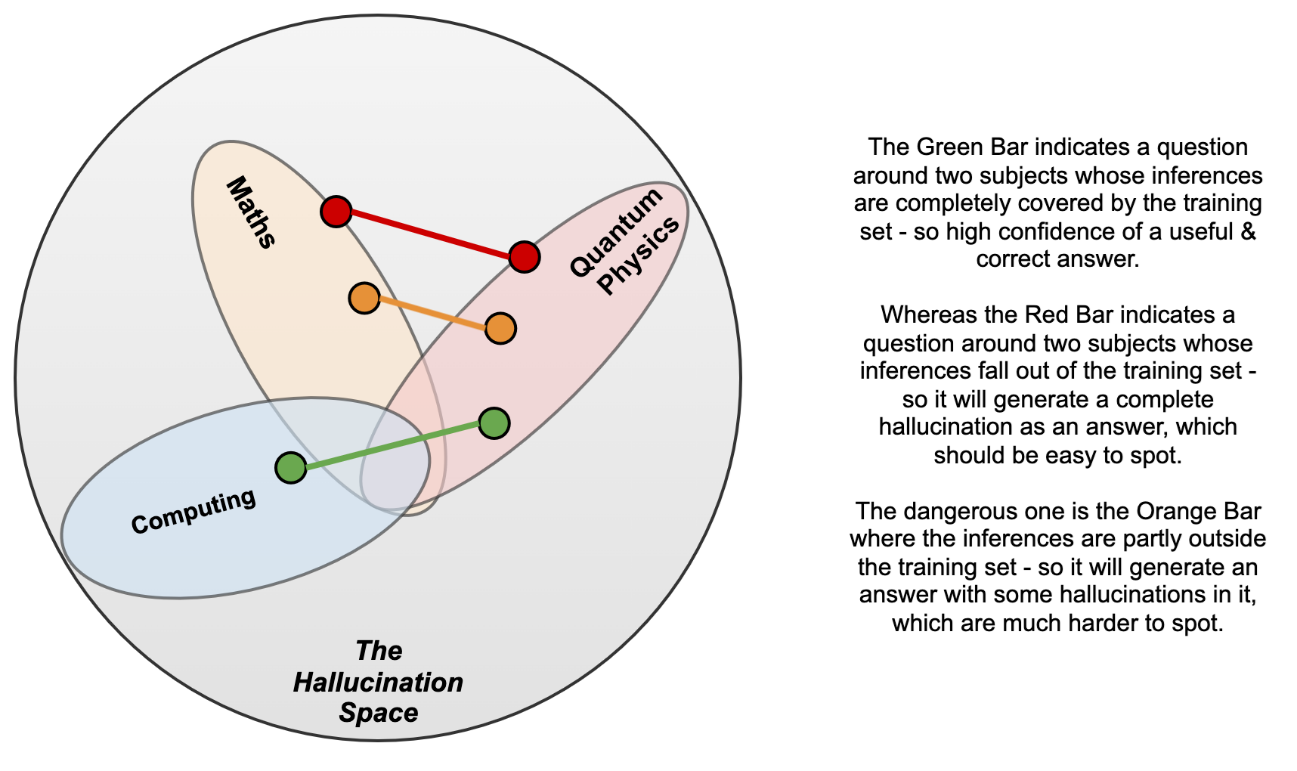

The accuracy of AI is a big topic of conversation and there are several factors to consider. Firstly, AI can hallucinate. A hallucination occurs when an AI produces a response that combines facts or conclusions in a way which is incorrect. This often happens in areas where there is either insufficient base information or so much competing information that it contradicts itself.

The trouble is that there is no way of detecting or flagging a hallucination: the results look as correct as any other. You must confirm that what the AI is telling you is accurate, because assuming it is correct could be dangerous.

Case in point: two lawyers in the US were caught out by ‘AI hallucinations’ when they used ChatGPT to find legal precedents that turned out to be fake.

Be aware of legislative requirements

As mentioned above, governments are in the process of developing legislation to reduce AI risks. Given AI has been used by businesses for over 20 years, this might be considered rather ‘late to the party’, but the previous uses of AI were more constrained.

With AI interacting directly with the public, we need to ensure safeguards are in place to protect privacy, provide safety and preserve legal rights. Let’s look at the proposed EU Artificial Intelligence Act as an example.

The EU Artificial Intelligence Act

Considering the explosive growth in the use of AI and the potential negative factors that can arise, the EU has moved quickly to put together a proposed AI Act to manage risks and simplify the delivery of consistent AI services.

The Act assigns applications of AI (excluding military usage cases) to three risk categories, with appropriate controls and restrictions:

- Applications and systems that create an unacceptable risk are banned, such as government-run social scoring akin to that being used in China.

- High-risk group applications, such as those which implement safety services, are subject to specific legal requirements.

- The last group, which consists of applications not explicitly banned or considered high-risk, is largely left unregulated.

The high-risk group controls are particularly interesting, in that the Act requires that such systems can be overseen by a person, or have constraints applied to them. In effect they cannot make automatic decisions without some form of human review. Further, the level of accuracy they obtain should be communicated to users and be subject to ongoing review to ensure quality.

Also, high-risk systems must maintain appropriate security controls, so they cannot be hacked and subverted. High-risk systems include biometric identification, critical infrastructure, safety systems plus a wide range of ‘personnel assessment & screening’ cases (training suitability, employees, recruitment, terminations, benefits, creditworthiness, law enforcement, lie detection, document verification, visa application and legal). Obviously, the EU is very concerned about AI automations that could disadvantage certain groups.

This means that HR and payroll systems that use AI for these purposes fall into the high-risk category. While Australia has not yet developed an equivalent AI legislative framework, it’s wise to closely align all of your AI activities to the Australian Privacy Principles.

Copyright

With AI training sets based on large public data sources such as the Internet, many are raising concerns about copyright infringement. AI can reproduce either exact extracts or very closely derived works from the original. This is part of the reason for the recent actors’ strike, as there are real concerns their likeness will be scanned and then ‘played’ via AI to provide a virtual performance, with the original actor being excluded from the process.

There are two main issues to consider:

- The AI generated text or images that you are using could be a copyright infringement.

- Your copyrighted text or images could be used by others, thereby infringing your copyright.

Managing the risks of AI

As we make greater use of AI tools, it’s important to remember that the companies behind them are suppliers and must be treated as such. As with any other supplier, it’s worth considering these steps to manage your risk:

- Conduct security reviews as part of the selection process

- Understand the usage cases, especially when it comes to the data exchanged

- Have the right security controls in place from the start

- Conduct regular reviews to check whether the usage case has changed and whether the data exchanged is as expected.

If you are intending to use AI systems in your business in a high-risk context, it’s important to have clear answers to the following questions:

- Will your queries be used as source data to train the AI models? If the answer is yes, you must remove sensitive information from the queries.

- Have you tested the model sufficiently to have high confidence it will behave as intended?

- Are you able to regularly test to confirm ongoing behavior?

- What safeguards are in place to catch inappropriate responses?

- Do responses undergo human review before use?

- Are users informed that they are interacting with an AI system?

- Where is the AI system based? Does this meet the requirements of your privacy policy with regard to sharing information with third parties?
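The human review question in the list above can be enforced in software rather than left to habit. The sketch below is one possible approach under our own assumptions: the `AIResponse`, `approve` and `release` names are hypothetical and do not come from any particular AI product or piece of legislation.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class AIResponse:
    text: str
    reviewed_by: Optional[str] = None  # name of the human reviewer, if any

def approve(response, reviewer):
    """Record that a named person has checked the response."""
    response.reviewed_by = reviewer
    return response

def release(response):
    """Only hand the response on once a human has signed it off."""
    if response.reviewed_by is None:
        raise PermissionError("AI response has not been reviewed by a person")
    return response.text

draft = AIResponse("Draft job description for a payroll officer")
print(release(approve(draft, "Sam")))
```

Calling `release` on a response nobody has approved raises an error, so an unreviewed answer cannot quietly reach a customer or an employee record.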

Finally, consider tracking your AI suppliers in your supplier register to safeguard your company against any claims made by customers and other parties that their data has been inappropriately used. Make sure to include the owner, the subscription model used, the usage case(s) and the data exchanged.
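A supplier register does not need to be elaborate. As a minimal sketch, assuming a simple in-house record (the `AISupplierEntry` fields, the `entries_needing_review` helper and the sample values are illustrative, not a prescribed format), the details listed above could be captured like this:

```python
from dataclasses import dataclass, field

@dataclass
class AISupplierEntry:
    supplier: str
    owner: str                 # person accountable for this usage
    subscription_model: str    # e.g. free, paid, enterprise, self-hosted
    usage_cases: list[str] = field(default_factory=list)
    data_exchanged: list[str] = field(default_factory=list)

def entries_needing_review(register, sensitive_types):
    """Return entries that exchange any data type flagged as sensitive."""
    flagged = set(sensitive_types)
    return [entry for entry in register if flagged & set(entry.data_exchanged)]

# Hypothetical register contents
register = [
    AISupplierEntry("ChatGPT", "Marketing lead", "paid",
                    ["sales content"], ["product descriptions"]),
    AISupplierEntry("ChatGPT", "HR manager", "enterprise",
                    ["job descriptions"], ["employee records"]),
]

for entry in entries_needing_review(register, ["employee records"]):
    print(entry.owner, "-", entry.subscription_model)
```

Recording the data exchanged as a list makes the regular reviews easier, because you can quickly pull out every AI usage that touches a sensitive data type.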
